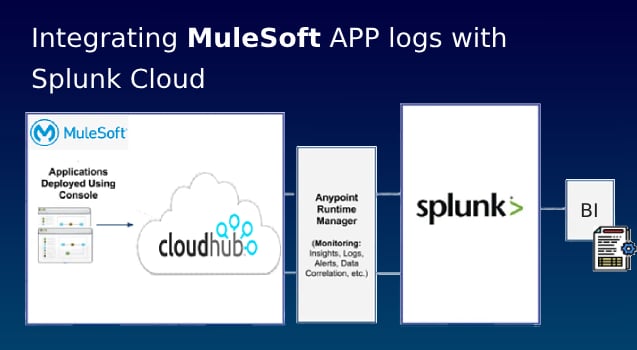

Integrating MuleSoft App Logs with Splunk Cloud

Many financial organizations use Splunk to retain log records so that they can comply with regulatory record-retention requirements. Because these requirements call for at least one year of log retention, organizations that depend heavily on three-tier layered API integrations choose to send their MuleSoft logs, whose retention capacity is often limited, to the Splunk logging and reporting system.

The target audience for this article is MuleSoft developers who want the key highlights and an explanation of the important steps for integrating MuleSoft with Splunk Cloud.

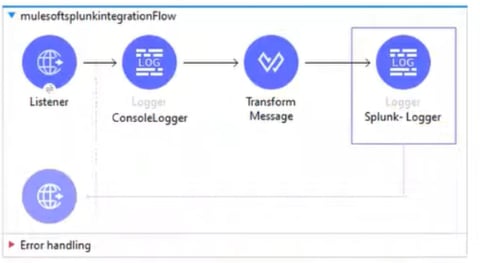

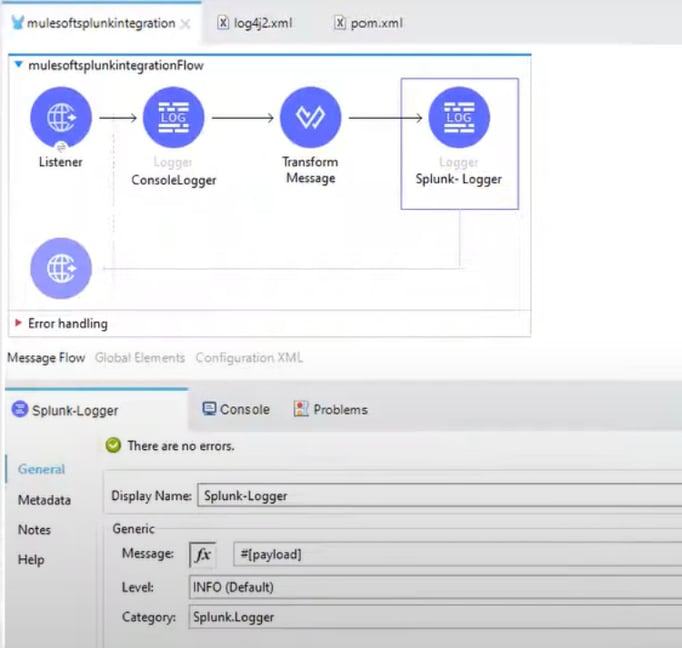

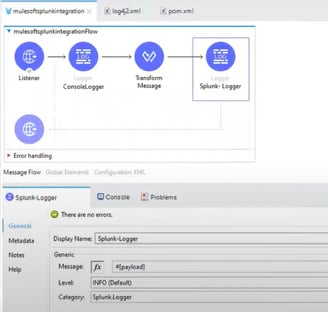

Looking at the Mule flow: an HTTP Listener receives the request, whatever arrives in the payload is logged to the console (ConsoleLogger), and a Transform Message component then transforms the payload before it is sent to Splunk:
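For readers who prefer the XML view over the canvas, here is a minimal sketch of such a flow in Mule 4 XML. The flow name, listener path `/splunk-demo`, port 8081 and the `sourcetype` value `log4j` are hypothetical placeholders; the final Logger uses the `Splunk.Logger` category that is later routed to Splunk by Log4j.

```xml
<?xml version="1.0" encoding="UTF-8"?>
<!-- Hypothetical Mule 4 flow: receive a payload, log it to the console,
     wrap it for Splunk, then log it through the "Splunk.Logger" category -->
<mule xmlns="http://www.mulesoft.org/schema/mule/core"
      xmlns:http="http://www.mulesoft.org/schema/mule/http"
      xmlns:ee="http://www.mulesoft.org/schema/mule/ee/core"
      xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
      xsi:schemaLocation="http://www.mulesoft.org/schema/mule/core http://www.mulesoft.org/schema/mule/core/current/mule.xsd
        http://www.mulesoft.org/schema/mule/http http://www.mulesoft.org/schema/mule/http/current/mule-http.xsd
        http://www.mulesoft.org/schema/mule/ee/core http://www.mulesoft.org/schema/mule/ee/core/current/mule-ee.xsd">

  <!-- Host, port and path below are placeholders -->
  <http:listener-config name="HTTP_Listener_config">
    <http:listener-connection host="0.0.0.0" port="8081"/>
  </http:listener-config>

  <flow name="splunk-demo-flow">
    <!-- HTTP Listener: entry point of the flow -->
    <http:listener config-ref="HTTP_Listener_config" path="/splunk-demo"/>
    <!-- Log whatever was received to the console -->
    <logger level="INFO" category="ConsoleLogger" message="#[payload]"/>
    <!-- Transform Message: wrap the payload in the fields sent to Splunk -->
    <ee:transform>
      <ee:message>
        <ee:set-payload><![CDATA[%dw 2.0
output application/json
---
{
  event: payload,
  sourcetype: "log4j"
}]]></ee:set-payload>
      </ee:message>
    </ee:transform>
    <!-- This category must match the AsyncLogger name in log4j2.xml -->
    <logger level="INFO" category="Splunk.Logger" message="#[payload]"/>
  </flow>
</mule>
```

Keeping the console logger and the Splunk logger as separate categories lets Log4j route them independently.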

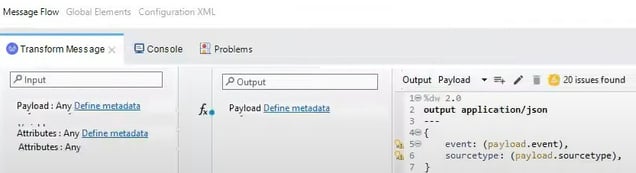

This is the content of the payload sent to Splunk, with the fields "event" and "sourcetype" (it can also be extended with additional fields such as "sent_from" or "date_sent"):
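As a sketch of that extension, the Transform Message below adds "sent_from" and "date_sent" next to "event" and "sourcetype". Using the DataWeave `app.name` binding for "sent_from" and `now()` for "date_sent" is an assumption about what those fields should hold, and the `sourcetype` value is a placeholder.

```xml
<!-- Hypothetical Transform Message with the optional extra fields -->
<ee:transform xmlns:ee="http://www.mulesoft.org/schema/mule/ee/core">
  <ee:message>
    <ee:set-payload><![CDATA[%dw 2.0
output application/json
---
{
  event: payload,
  sourcetype: "log4j",
  // app.name resolves to the name of the deployed Mule application
  sent_from: app.name,
  // current date-time at the moment of the transformation
  date_sent: now()
}]]></ee:set-payload>
  </ee:message>
</ee:transform>
```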

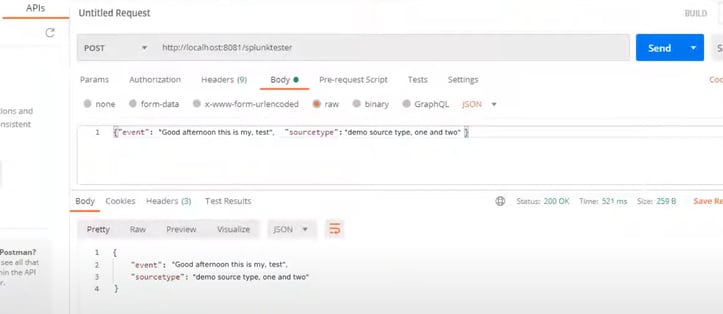

The following is a sample Postman configuration for sending the payload to Splunk as a test message:

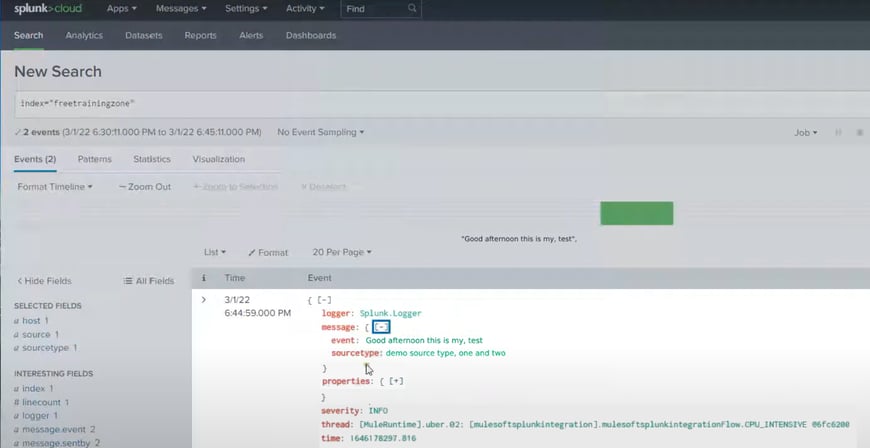

Now, let's check in the Splunk index how the message was received:

To achieve this, you will need to configure both MuleSoft and Splunk Cloud.

Splunk Configuration:

1) Index Setup

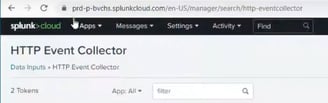

2) Set Up HTTP Event Collector

3) Input Settings

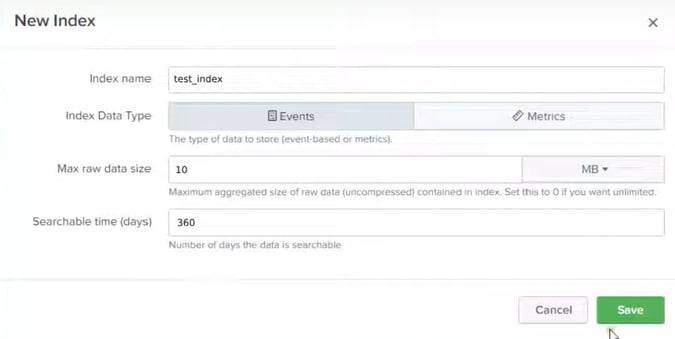

1) Index Setup

Specify the index name, the maximum raw data size and the searchable time. For searchable time, enter the number of days required by your company's retention policy. In this demo, we entered 360 days (roughly 1 year); to fully cover a one-year requirement, enter at least 365. Save the index and it will be created:

1) Add the Splunk Java Logging Library (pom.xml)

For the logging framework, you will need to integrate the Splunk Java logging library with Log4j in the Mule application because of additional compatibility requirements. Using the Log4j configuration, the CloudHub application can be integrated with the Splunk logging system. Once Log4j is configured to send logs to both Splunk and CloudHub, the default CloudHub application logs need to be disabled (the "Disable CloudHub logs" option in Runtime Manager).

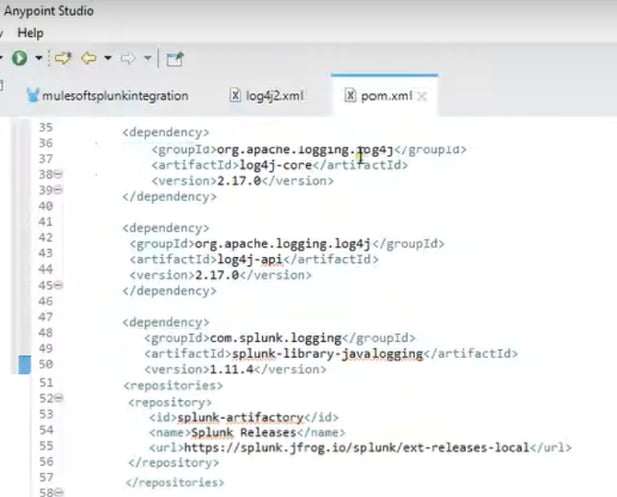

In the pom.xml file, add three dependencies: log4j (with its version number), log4j-api and com.splunk.logging, and specify the repository from which the Splunk logging library will be retrieved:
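A sketch of the corresponding pom.xml fragments is shown below. The version numbers are only examples, so check for current releases; the repository URL is the one commonly used for Splunk's Java logging library, but confirm it against Splunk's documentation before relying on it.

```xml
<!-- pom.xml (fragments): merge into the existing <dependencies> and
     <repositories> sections; versions are examples only -->
<dependencies>
  <!-- Log4j core and API -->
  <dependency>
    <groupId>org.apache.logging.log4j</groupId>
    <artifactId>log4j-core</artifactId>
    <version>2.17.1</version>
  </dependency>
  <dependency>
    <groupId>org.apache.logging.log4j</groupId>
    <artifactId>log4j-api</artifactId>
    <version>2.17.1</version>
  </dependency>
  <!-- Splunk logging library that provides the SplunkHttp appender -->
  <dependency>
    <groupId>com.splunk.logging</groupId>
    <artifactId>splunk-library-javalogging</artifactId>
    <version>1.7.3</version>
  </dependency>
</dependencies>

<repositories>
  <!-- Repository hosting the Splunk logging library -->
  <repository>
    <id>splunk-artifactory</id>
    <name>Splunk Releases</name>
    <url>https://splunk.jfrog.io/splunk/ext-releases-local</url>
  </repository>
</repositories>
```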

2) Add the Appender in Log4j (log4j2.xml)

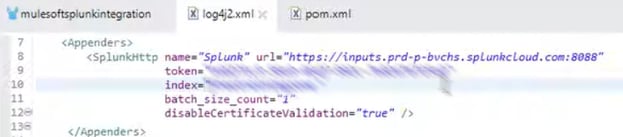

Add the "SplunkHttp" appender in log4j2.xml and set its URL to the Splunk HTTP Event Collector URL (copy and paste it from Splunk). Check with your Splunk infrastructure team which HTTP port number to use or, if you are using the free version of Splunk Cloud, take it from the "Edit Global Settings" window in Splunk. The token value and the index name can be taken from the Splunk HTTP Event Collector window.
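A minimal sketch of the appender, assuming the index "demoindex" used later in this article. The host, port and token are placeholders to be replaced with the values from the HTTP Event Collector page. `disableCertificateValidation="true"` is shown only as a convenience for trial setups and should be avoided in production.

```xml
<!-- log4j2.xml (fragment): Splunk HEC appender; url and token are placeholders -->
<Appenders>
  <SplunkHttp name="SPLUNK"
              url="https://YOUR-SPLUNK-HOST:8088"
              token="YOUR-HEC-TOKEN"
              index="demoindex"
              sourcetype="log4j"
              disableCertificateValidation="true">
    <!-- Only the message text is sent as the Splunk event -->
    <PatternLayout pattern="%m"/>
  </SplunkHttp>
</Appenders>
```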

Splunk HTTP Event Collector URL:

It is also critical to list the package names in the Configuration element (otherwise it will not work and you will get an error):
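The `packages` attribute tells Log4j which packages to scan for plugins, which is how it finds the SplunkHttp appender in `com.splunk.logging`. The skeleton below is a sketch; including `com.mulesoft.ch.logging.appender` is an assumption that only matters if you also add MuleSoft's CloudHub appender, and the console appender is a placeholder so the file is complete on its own.

```xml
<?xml version="1.0" encoding="UTF-8"?>
<!-- log4j2.xml skeleton: "packages" lists where Log4j looks for plugin classes -->
<Configuration status="INFO" name="cloudhub"
               packages="com.mulesoft.ch.logging.appender,com.splunk.logging">
  <Appenders>
    <Console name="CONSOLE" target="SYSTEM_OUT">
      <PatternLayout pattern="%d [%t] %-5p %c - %m%n"/>
    </Console>
    <!-- The SplunkHttp appender goes here -->
  </Appenders>
  <Loggers>
    <AsyncRoot level="INFO">
      <AppenderRef ref="CONSOLE"/>
    </AsyncRoot>
  </Loggers>
</Configuration>
```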

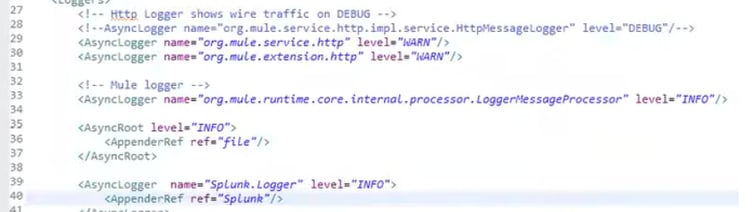

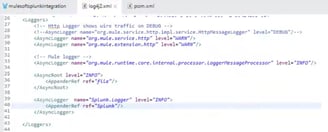

3) Set Up AsyncLogger (log4j2.xml)

Set up an AsyncLogger and name it "Splunk.Logger", matching the "Category" of the Logger in the Mule flow:
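A sketch of the logger section, assuming the Splunk appender is named "SPLUNK" and a console appender named "CONSOLE" exists (both names are hypothetical). `additivity="false"` keeps "Splunk.Logger" events out of the root logger's appenders; remove it if you also want those events in the other outputs.

```xml
<!-- log4j2.xml (fragment): route the "Splunk.Logger" category to Splunk -->
<Loggers>
  <!-- Name must match the Category set on the Mule Logger component -->
  <AsyncLogger name="Splunk.Logger" level="INFO" additivity="false">
    <AppenderRef ref="SPLUNK"/>
  </AsyncLogger>
  <!-- Everything else keeps going to the console -->
  <AsyncRoot level="INFO">
    <AppenderRef ref="CONSOLE"/>
  </AsyncRoot>
</Loggers>
```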

The "Splunk.Logger" category, as set on the Logger in the Message Flow view:

MuleSoft configuration and flow setup:

1) Add the Splunk Java logging library

2) Add the appender

3) Set up the AsyncLogger

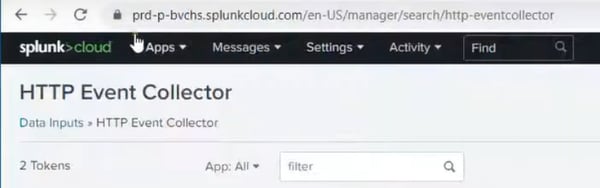

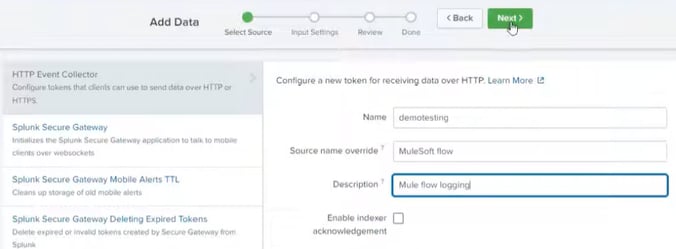

2) Set Up HTTP Event Collector

The HTTP Event Collector in Splunk is located under Data > Data Inputs. Make sure you create a separate token for your index. Click "New Token" in the top right, give it a name, and add the provider name and a description:

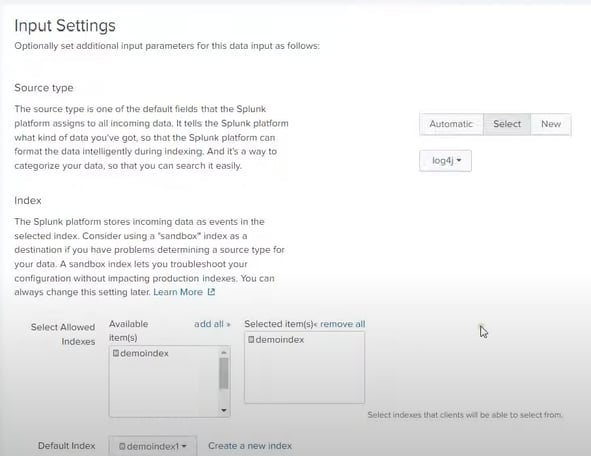

3) Input Settings

Click "Next", select "log4j" as the source type, and select "demoindex" as the index:

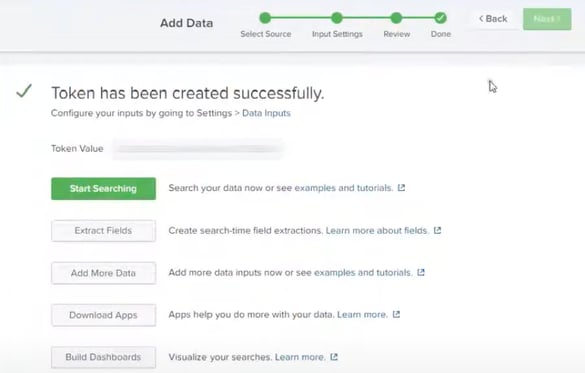

Review and submit; the token for MuleSoft connectivity should then be created:

Do not forget to copy the "Token Value" shown under "HTTP Event Collector"; it is the token used in the SplunkHttp appender in log4j2.xml.